[Case Study] How to Achieve Better Inbox Placement

Email spam filters evaluate a variety of factors when determining where to deliver an email. Email content, sender reputation, email authentication, and user behavior are all known to influence email placement.

While analyzing clients’ email test reports in GlockApps, we sometimes noticed that the same message achieved different Inbox placement rates in the manual and automatic tests. Since the message was sent to the seed list from the same email account and mail server in both cases, we wondered what could cause the difference.

On further investigation of the client’s reports, we discovered that the message had been sent to the seed list in different ways. In the manual test, the client sent a single message with all of the seed addresses in the To: field. In the automatic test, GlockApps sent a separate message to each seed address, with one address in the To: field, and the client had set a 3-second delay between messages.

This finding gave us grounds for further testing, so we ran our own tests to see whether the way a message is sent could actually influence where it is delivered. Below, we share our experience.

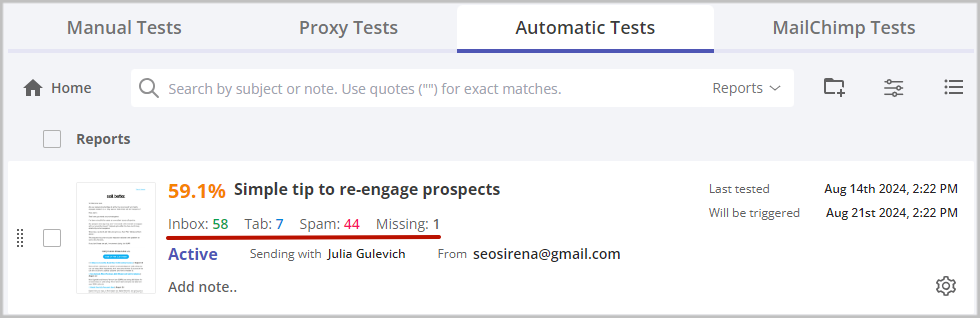

Running an Automatic Inbox Placement Test

In GlockApps, we created a sending account and set up an automatic test. We used all of the available spam filters and mailbox providers for the test.

The testing environment was:

Message Subject: Simple tip to re-engage prospects

“From” email: a gmail.com email address

Sending account: Gmail’s SMTP server

Seed emails: 110

Sending mode: an individual message to each seed address, with a 3-second delay between messages
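The automatic test's sending mode can be approximated with Python's standard `email` and `smtplib` modules. This is a minimal sketch, not how GlockApps sends internally: the function names (`build_individual_messages`, `send_individually`, `send_via_gmail`) and the credentials are hypothetical. It only illustrates one message per seed address with a fixed pause between sends. The SMTP object and the sleep function are passed in, so the loop can be exercised without a network connection.

```python
import smtplib
import time
from email.message import EmailMessage
from typing import Callable, List


def build_individual_messages(sender: str, seeds: List[str],
                              subject: str, body: str) -> List[EmailMessage]:
    """Create one message per seed address, each with a single To: recipient."""
    messages = []
    for address in seeds:
        msg = EmailMessage()
        msg["From"] = sender
        msg["To"] = address            # exactly one recipient per message
        msg["Subject"] = subject
        msg.set_content(body)
        messages.append(msg)
    return messages


def send_individually(smtp, messages: List[EmailMessage], delay: float = 3.0,
                      sleep: Callable[[float], None] = time.sleep) -> int:
    """Send each message separately, pausing `delay` seconds between sends.

    `smtp` is any object with a send_message(msg) method, e.g. smtplib.SMTP.
    """
    for i, msg in enumerate(messages):
        if i > 0:
            sleep(delay)               # gap between messages (3 s in our test)
        smtp.send_message(msg)
    return len(messages)


def send_via_gmail(user: str, app_password: str,
                   messages: List[EmailMessage]) -> int:
    """Hypothetical wrapper: connect to Gmail's SMTP server and send one by one."""
    with smtplib.SMTP("smtp.gmail.com", 587) as smtp:
        smtp.starttls()                # upgrade the connection to TLS
        smtp.login(user, app_password) # placeholder credentials
        return send_individually(smtp, messages)
```

Gmail's SMTP server accepts STARTTLS on port 587; with a Gmail account you will typically need an app password rather than your regular password.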

When the automatic test finished, we ran a manual test for the same message.

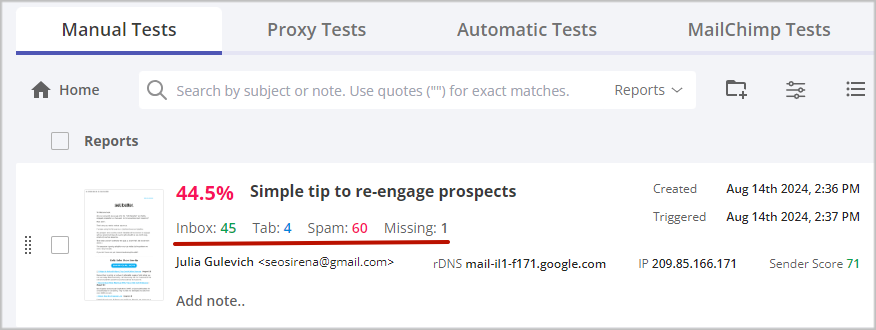

Running a Manual Inbox Placement Test

Now, let’s look at the manual Inbox Insight test.

The testing environment was:

Message Subject: Simple tip to re-engage prospects

“From” email: a gmail.com email address

Sending account: Gmail’s SMTP server

Seed emails: 110

Sending mode: a single message with all 110 seed addresses in the To: field
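For contrast, the manual test's sending mode corresponds to one message whose To: header lists every seed address. A minimal sketch with Python's `email` package follows; `build_single_message` and `to_recipients` are hypothetical helper names. If such a message were passed to `smtplib.SMTP.send_message` without explicit recipients, it would take them from the To: header and submit the message to all of them in a single SMTP transaction.

```python
from email.message import EmailMessage
from email.utils import getaddresses
from typing import List


def build_single_message(sender: str, seeds: List[str],
                         subject: str, body: str) -> EmailMessage:
    """Create ONE message whose To: header lists every seed address."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(seeds)       # all recipients share one header
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def to_recipients(msg: EmailMessage) -> List[str]:
    """Return the bare addresses listed in the message's To: header."""
    return [addr for _, addr in getaddresses([str(msg["To"])])]
```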

Analyzing Inbox Placement Results

Comparing the two reports, we got these numbers:

| Email placement | Automatic test | Manual test |
|---|---|---|
| Inbox | 58 | 45 |
| Tab | 7 | 4 |
| Spam | 44 | 60 |
| Missing | 1 | 1 |
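Because both tests used the same 110 seed addresses, the raw counts convert directly into percentages. The short Python sketch below does that arithmetic on the numbers from the table; `placement_rates` is a hypothetical helper name.

```python
from typing import Dict

# Placement counts copied from the comparison table (110 seeds per test).
RESULTS: Dict[str, Dict[str, int]] = {
    "Automatic test": {"Inbox": 58, "Tab": 7, "Spam": 44, "Missing": 1},
    "Manual test": {"Inbox": 45, "Tab": 4, "Spam": 60, "Missing": 1},
}


def placement_rates(counts: Dict[str, int]) -> Dict[str, float]:
    """Convert raw placement counts into percentages of all seed messages."""
    total = sum(counts.values())
    return {k: round(100 * v / total, 1) for k, v in counts.items()}


for test, counts in RESULTS.items():
    print(test, placement_rates(counts))
```

With these counts, the Inbox rate is about 52.7% for the automatic test versus 40.9% for the manual test, and the Spam rate is 40.0% versus 54.5%.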

The placement categories are defined as follows:

Inbox – the number of messages delivered to the Inboxes of the seed email accounts;

Tab – the number of messages delivered to a tab different from the primary inbox of the seed email accounts (for example, Gmail’s Promotions tab);

Spam – the number of messages delivered to the Spam or Junk folder of the seed email accounts;

Missing – the number of undelivered messages (they may have been blocked or rejected).

Conclusion

Our case study suggests that the way a message is sent to a mailing list can affect whether it lands in the Inbox or in Spam. In our test, the individually sent messages landed in 58 seed Inboxes and 44 Spam folders, while the single message addressed to all 110 seeds landed in 45 Inboxes and 60 Spam folders. When you send an email with many addresses in the To: or BCC: field, you may see more Spam placements, because spam filters may treat such a sending as a bulk email campaign.

When you run a manual Inbox Insight test in GlockApps, we recommend the following practices:

1. Create a new list (group, audience) in your email service provider.

2. Import the seed list into the new list (group, audience).

3. Send the message, including the test ID snippet, to the list (group, audience) that contains the seed addresses.

Whenever possible, avoid sending the message to the seed list with all of the addresses in the To: or BCC: field.

If you cannot avoid it, we recommend that you:

1. Split the seed list into smaller lists of 20-30 addresses each.

2. Send the same message, with the same test ID snippet, to each smaller list as a separate mailing.
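Splitting the seed list can be sketched in Python as follows. The helper names (`split_evenly`, `build_batch_messages`) are hypothetical, and the sketch assumes a maximum of 30 addresses per list. It distributes addresses evenly rather than filling fixed-size chunks, so the last list is not left much smaller than the others: 110 seeds become lists of 28, 28, 27, and 27. Every mailing reuses the same body, so the test ID snippet stays identical across them.

```python
import math
from email.message import EmailMessage
from typing import List


def split_evenly(seeds: List[str], max_size: int = 30) -> List[List[str]]:
    """Split seeds into the fewest groups of at most `max_size`, sized as evenly as possible."""
    if max_size < 1:
        raise ValueError("max_size must be positive")
    if not seeds:
        return []
    n_groups = math.ceil(len(seeds) / max_size)
    base, extra = divmod(len(seeds), n_groups)
    groups, start = [], 0
    for i in range(n_groups):
        end = start + base + (1 if i < extra else 0)  # first `extra` groups get one more
        groups.append(seeds[start:end])
        start = end
    return groups


def build_batch_messages(sender: str, seeds: List[str], subject: str,
                         body: str, max_size: int = 30) -> List[EmailMessage]:
    """Build one separate mailing per small list, all with the same body."""
    messages = []
    for group in split_evenly(seeds, max_size):
        msg = EmailMessage()
        msg["From"] = sender
        msg["To"] = ", ".join(group)
        msg["Subject"] = subject
        msg.set_content(body)          # identical body, identical test ID snippet
        messages.append(msg)
    return messages
```

The even split guarantees at most `max_size` addresses per list, but for some totals a list can fall below 20 (35 seeds, for example, become lists of 18 and 17).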